Upload Existing Events Via CSV

This feature is in beta. It might not work for your use case.

Capturing events through the Insights API is important to continuously train and improve the Recommend models. Collecting enough events can take some time. You can import past user events from a CSV file to benefit from Recommend earlier.

Format of the CSV file with historical events

The CSV file with historical events must have the following format:

- The CSV file must be 100 MB or less in size.

- The first row must contain the strings userToken, timestamp, objectID, eventType, and eventName. Any extra columns are ignored.

- Each row represents an event tied to a single objectID.

- The timestamps should cover a period of at least 30 days. Any data older than 90 days is ignored.
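The file-level rules above can be checked before uploading. The following is a minimal sketch, not an official Algolia tool: the function names are hypothetical, 100 MB is assumed to mean 100 × 1024 × 1024 bytes, timestamps without a time zone are assumed to be UTC, and "cover a period of at least 30 days" is interpreted as the gap between the oldest and newest usable timestamp.

```python
import csv
import os
from datetime import datetime, timedelta, timezone

REQUIRED_COLUMNS = {"userToken", "timestamp", "objectID", "eventType", "eventName"}
MAX_SIZE_BYTES = 100 * 1024 * 1024  # assumption: 1 MB = 1024 * 1024 bytes


def parse_timestamp(value):
    """Parse an ISO 8601 / RFC 3339 date or date-time into an aware UTC datetime."""
    text = (value or "").strip()
    if text.endswith(("Z", "z")):
        text = text[:-1] + "+00:00"  # older Python versions reject a trailing "Z"
    parsed = datetime.fromisoformat(text)  # raises ValueError on unreadable input
    if parsed.tzinfo is None:
        parsed = parsed.replace(tzinfo=timezone.utc)  # assumption: naive means UTC
    return parsed.astimezone(timezone.utc)


def check_events_file(path, now=None):
    """Return a list of human-readable problems found in the CSV file."""
    now = now or datetime.now(timezone.utc)
    problems = []
    if os.path.getsize(path) > MAX_SIZE_BYTES:
        problems.append("file is larger than 100 MB")
    with open(path, newline="", encoding="utf-8") as handle:
        reader = csv.DictReader(handle)
        missing = REQUIRED_COLUMNS - set(reader.fieldnames or [])
        if missing:
            problems.append("missing columns: " + ", ".join(sorted(missing)))
            return problems
        cutoff = now - timedelta(days=90)  # older events are ignored
        usable = []
        for number, row in enumerate(reader, start=1):
            try:
                moment = parse_timestamp(row["timestamp"])
            except ValueError:
                problems.append(f"data row {number}: unreadable timestamp")
                continue
            if moment >= cutoff:
                usable.append(moment)
    if not usable:
        problems.append("no events within the last 90 days")
    elif max(usable) - min(usable) < timedelta(days=30):
        problems.append("usable events cover fewer than 30 days")
    return problems
```

An empty list means none of these checks failed. Passing a fixed `now` makes the 90-day cutoff reproducible.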

Each event must have the following properties:

| Property | Description |
| --- | --- |
| userToken | a unique identifier for the user session |
| timestamp | the date of the event in a standard format: ISO8601 or RFC3339 (with or without the time) |
| objectID | a unique identifier for the item the event is tied to |
| eventType | the type of event (either “click” or “conversion”) |
| eventName | a name for the event, which can be the same as eventType |
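The per-event properties can be checked row by row. This is an illustrative sketch under stated assumptions: `validate_event` is a hypothetical helper, each event is assumed to be a dictionary of strings (for example, a row from `csv.DictReader`), and Python's `datetime.fromisoformat` stands in for full ISO 8601 / RFC 3339 parsing, which it only approximates.

```python
from datetime import datetime

REQUIRED_FIELDS = ("userToken", "timestamp", "objectID", "eventType", "eventName")
ALLOWED_EVENT_TYPES = {"click", "conversion"}


def validate_event(event):
    """Return a list of problems for one event given as a dict of strings."""
    problems = []
    # Every property must be present and non-empty.
    for field in REQUIRED_FIELDS:
        if not (event.get(field) or "").strip():
            problems.append(f"{field} is missing or empty")
    # eventType accepts exactly two values.
    event_type = (event.get("eventType") or "").strip()
    if event_type and event_type not in ALLOWED_EVENT_TYPES:
        problems.append(f"eventType must be 'click' or 'conversion', got {event_type!r}")
    # timestamp may be a date or a date-time.
    timestamp = (event.get("timestamp") or "").strip()
    if timestamp:
        if timestamp.endswith("Z"):
            timestamp = timestamp[:-1] + "+00:00"  # older Pythons reject "Z"
        try:
            datetime.fromisoformat(timestamp)
        except ValueError:
            problems.append(f"timestamp is not ISO 8601 / RFC 3339: {timestamp!r}")
    return problems
```

For example, an event whose eventType is `view` yields one problem, while a well-formed click event yields an empty list.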

Upload historical events for Recommend

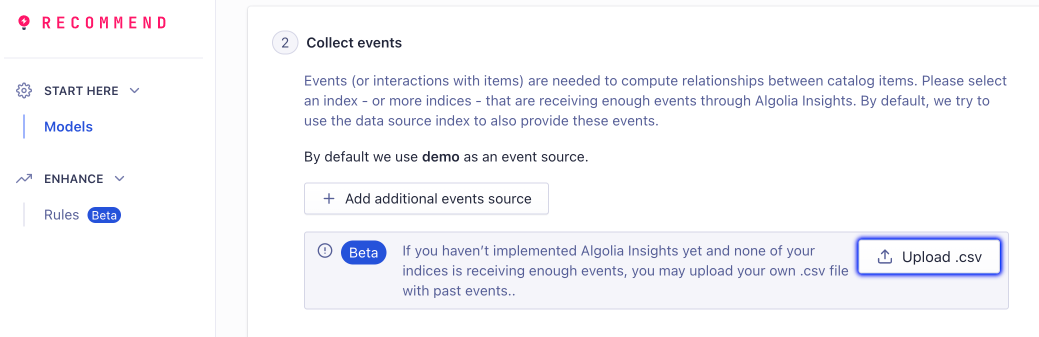

To import historical events from a CSV file, follow these steps:

- Go to the Recommend models page in the Algolia dashboard.

- Select a model you want to train.

- In the Collect events section, click Upload .csv to upload your CSV file with the historical events.

Once you have collected enough click and conversion events, Algolia Recommend uses only those events to train the model and discards the events from the CSV file.

You can also re-upload a CSV file. The training only takes the newer file into account and discards the old events.

Export events from Google Analytics 360 with BigQuery

If you track user events with Google Analytics, you can export these events with BigQuery. You can then save them in a CSV file and import that file in the Algolia dashboard to start training your Recommend models.

Before you start

Before you can export your events from Google Analytics with BigQuery, you must have:

- A Google Analytics 360 account with a website tracking ID.

- Enhanced Ecommerce activated and set up for the website.

- BigQuery Export enabled in GA360 to set up daily imports into BigQuery.

The productSKU from GA360 has to match the objectID in your index.
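One way to spot mismatches early is to compare the objectID column of the exported CSV file against the object IDs in your index. The sketch below assumes you already have those IDs as a collection of strings (how you obtain them is outside its scope); `unknown_object_ids` is a hypothetical helper, not part of any Algolia library.

```python
import csv


def unknown_object_ids(csv_path, index_object_ids):
    """Return the sorted objectID values in the CSV that are not in the index."""
    known = set(index_object_ids)
    with open(csv_path, newline="", encoding="utf-8") as handle:
        # Collect each distinct objectID that appears in the events file.
        seen = {row["objectID"] for row in csv.DictReader(handle)}
    return sorted(seen - known)
```

A non-empty result lists product SKUs that have no matching record in the index.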

Set up a BigQuery export

You can adapt the following query to export the user events required to train Algolia Recommend models.

Replace the following variables:

- GCP_PROJECT_ID: the name of the project that holds the Analytics 360 data in BigQuery

- BQ_DATASET: the name of the dataset that stores the exported events

- DATE_FROM and DATE_TO: the corresponding dates (in YYYY-MM-DD format) for a time window of at least 30 days
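If you run the query from a script, the placeholders can be filled in programmatically. This is a minimal sketch: `fill_query_template` is a hypothetical helper, it uses plain string replacement on the query text in this section, and it rejects date ranges shorter than 30 days.

```python
from datetime import date


def fill_query_template(template, project_id, dataset, date_from, date_to):
    """Substitute the four placeholders in the query text."""
    # date.fromisoformat raises ValueError unless the value is YYYY-MM-DD.
    start = date.fromisoformat(date_from)
    end = date.fromisoformat(date_to)
    if (end - start).days < 30:
        raise ValueError("DATE_FROM and DATE_TO must span at least 30 days")
    return (
        template
        .replace("GCP_PROJECT_ID", project_id)
        .replace("BQ_DATASET", dataset)
        .replace("DATE_FROM", start.isoformat())
        .replace("DATE_TO", end.isoformat())
    )
```

Plain replacement is enough here because each placeholder name appears only where a value is expected. It does not escape its inputs, so pass only values you trust.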

You can run this query in the SQL workspace for BigQuery or use one of the BigQuery API client libraries.

```sql
WITH ecommerce_data AS (
  SELECT
    fullVisitorId AS user_token,
    -- hits.time is the offset in milliseconds from the start of the visit
    TIMESTAMP_SECONDS(visitStartTime + CAST(hits.time / 1000 AS INT64)) AS timestamp,
    products.productSKU AS object_id,
    -- Enhanced Ecommerce action types:
    -- 2 = product detail view, 3 = add to cart, 5 = checkout, 6 = purchase
    CASE
      WHEN hits.eCommerceAction.action_type = "2" THEN 'click'
      WHEN hits.eCommerceAction.action_type = "3" THEN 'click'
      WHEN hits.eCommerceAction.action_type = "5" THEN 'click'
      WHEN hits.eCommerceAction.action_type = "6" THEN 'conversion'
    END AS event_type,
    CASE
      WHEN hits.eCommerceAction.action_type = "2" THEN "product_view"
      WHEN hits.eCommerceAction.action_type = "3" THEN "add_to_cart"
      WHEN hits.eCommerceAction.action_type = "5" THEN "checkout"
      WHEN hits.eCommerceAction.action_type = "6" THEN "purchase"
    END AS event_name
  FROM
    `GCP_PROJECT_ID.BQ_DATASET.ga_sessions_*`,
    UNNEST(hits) AS hits,
    UNNEST(hits.product) AS products
  WHERE
    _TABLE_SUFFIX BETWEEN FORMAT_DATE('%Y%m%d', DATE('DATE_FROM'))
      AND FORMAT_DATE('%Y%m%d', DATE('DATE_TO'))
    AND fullVisitorId IS NOT NULL
    AND hits.eCommerceAction.action_type IN UNNEST(["2", "3", "5", "6"])
),
-- Grouping by every column removes duplicate events
dedup_ecommerce_data AS (
  SELECT
    user_token AS userToken,
    timestamp,
    event_name AS eventName,
    event_type AS eventType,
    object_id AS objectID
  FROM ecommerce_data
  GROUP BY userToken, timestamp, eventName, eventType, objectID
)
SELECT * FROM dedup_ecommerce_data
```
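Once the query has run, its rows still need to be saved as a CSV file with the expected header. The following sketch shows one way to do that; `write_events_csv` is a hypothetical helper that accepts any iterable of rows supporting `row["column"]` access (plain dictionaries work), and it converts `datetime` values to ISO 8601 strings.

```python
import csv
from datetime import datetime

CSV_COLUMNS = ["userToken", "timestamp", "objectID", "eventType", "eventName"]


def write_events_csv(rows, path):
    """Write rows to a CSV file with the expected header; return the row count."""
    count = 0
    with open(path, "w", newline="", encoding="utf-8") as handle:
        writer = csv.writer(handle)
        writer.writerow(CSV_COLUMNS)  # the first row must name the columns
        for row in rows:
            values = []
            for column in CSV_COLUMNS:
                value = row[column]
                if isinstance(value, datetime):
                    value = value.isoformat()  # ISO 8601 text for timestamps
                values.append(value)
            writer.writerow(values)
            count += 1
    return count
```

If you use the `google-cloud-bigquery` Python client, the rows returned by `Client().query(sql).result()` should be usable here directly, on the assumption that they support access by column name and return TIMESTAMP values as `datetime` objects. Check the returned row count and the file size against the 100 MB limit before uploading.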