FAQ

What IP address can I use for IP whitelisting?

The Crawler uses a static IP address that can be whitelisted if necessary: 34.66.202.43.

What is the user agent of the Crawler (useful for whitelisting)?

When fetching pages, the crawler identifies itself with the following user agent: Algolia Crawler/xx.xx.xx (each xx is a number, e.g. 1.10.0).

The version at the end of that user agent changes regularly as the product evolves.

You might want to specify our user agent in your robots.txt file. In that case, you can specify it as User-agent: Algolia Crawler without any version number.

That being said, whitelisting the full user agent string manually (e.g. through nginx or other custom validation) may require updates whenever the version changes, so that the crawler can keep fetching pages.

Consider using IP validation instead.
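As an illustration of the two approaches, here is a minimal Python sketch of a custom validation step. It assumes the user agent always has the form Algolia Crawler/xx.xx.xx described above; the function names are hypothetical and not part of any Algolia tooling.

```python
import ipaddress
import re

# Static IP address documented in this FAQ.
CRAWLER_IP = ipaddress.ip_address("34.66.202.43")

# Matches "Algolia Crawler" with or without a version such as "/1.10.0",
# so the check keeps working when the version number changes.
CRAWLER_UA = re.compile(r"Algolia Crawler(/\d+\.\d+\.\d+)?")


def is_crawler_user_agent(user_agent):
    """Return True if the header looks like the Crawler's user agent."""
    return CRAWLER_UA.fullmatch(user_agent) is not None


def is_crawler_ip(remote_addr):
    """Return True if the remote address is the Crawler's static IP."""
    try:
        return ipaddress.ip_address(remote_addr) == CRAWLER_IP
    except ValueError:
        # Not a valid IP address at all.
        return False
```

Matching on the IP address avoids any dependency on the version string, which is why IP validation is the simpler option.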

What can I crawl with the Crawler?

The Crawler is intended for content that is solely owned by the user, whether it’s hosted on their own infrastructure or on a SaaS. Algolia automatically restricts the Crawler’s scope to a list of allowed domains. If you need to add another domain to your allowed list, go to the Domains page and verify your domain.

One of my pages was not crawled

There are several possibilities for why this might have happened:

- Crawling a website completely can take hours, depending on its size: make sure that the crawling operation has finished.

- Some pages may not be linked with each other: make sure that there is a way to navigate from the website’s start pages to the missing page, or that the missing page is listed in the sitemaps. If it is inaccessible, you may want to add its URL as a start URL in your crawler’s configuration file.

- The page may have been ignored if it refers to a canonical URL, if it does not match a pathsToMatch in any of your crawler’s actions, or if it matches any exclusionPatterns. For more information, check out the question: When are pages skipped or ignored?

- If the page is rendered using JavaScript, you may need to set renderJavaScript to true in your configuration (note: this makes the crawling process slower).

- If the page is behind a login wall, you may need to set up the login property of your configuration.

If none of these solve your problem, an error may have happened while crawling the page. Please check your logs using the Monitoring and URL Inspector tabs.

You can also use the URL tester in the Editor tab of the Admin to get details on why a URL was skipped / ignored.

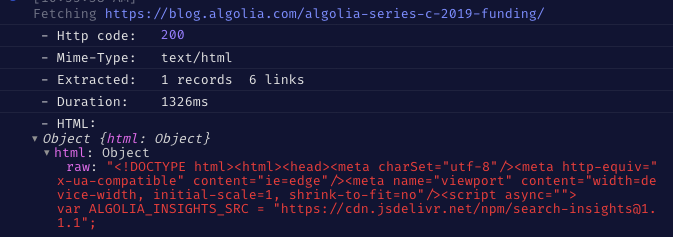

The Crawler doesn’t see the same HTML as me

Sometimes, websites behave differently depending on the user agent they receive. You can see the HTML discovered by the Crawler in the URL Tester.

If this HTML is missing information and debugging your selectors has not helped, check whether the difference is due to the Crawler’s user agent. You can do this with browser extensions or using cURL. Send several requests with a few different user agents and compare the results.

curl http://example.com

curl -H "User-Agent: Algolia Crawler" http://example.com

curl -H "User-Agent: Mozilla/5.0 (Macintosh; Intel Mac OS X 10.15; rv:71.0) Gecko/20100101 Firefox/71.0" http://example.com

Sometimes, having a “robot” user agent is actually helpful: when they detect a web crawler, some websites return content that works without JavaScript.
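The same comparison can be scripted. The sketch below is one possible approach, not an Algolia tool: it fetches a URL once per user agent and returns the size and a short hash of each response so that differences stand out. The function names are hypothetical. Note that when no user agent is given, Python's urllib sends its own default (Python-urllib/x.y), which differs from cURL's default.

```python
import hashlib
import urllib.request

# User agents taken from the cURL commands above. None means "send no
# explicit User-Agent", so urllib falls back to its own default.
USER_AGENTS = {
    "default": None,
    "crawler": "Algolia Crawler",
    "firefox": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10.15; rv:71.0) "
               "Gecko/20100101 Firefox/71.0",
}


def build_request(url, user_agent=None):
    """Build a GET request, optionally overriding the User-Agent header."""
    headers = {"User-Agent": user_agent} if user_agent else {}
    return urllib.request.Request(url, headers=headers)


def fingerprint(body):
    """Summarize a response body as (size in bytes, short SHA-256 prefix)."""
    return len(body), hashlib.sha256(body).hexdigest()[:12]


def compare_user_agents(url, timeout=10):
    """Fetch url once per user agent and return a fingerprint for each."""
    results = {}
    for name, user_agent in USER_AGENTS.items():
        request = build_request(url, user_agent)
        with urllib.request.urlopen(request, timeout=timeout) as response:
            results[name] = fingerprint(response.read())
    return results
```

Calling compare_user_agents("http://example.com") returns one fingerprint per user agent. Identical fingerprints suggest the site does not vary its HTML by user agent, although dynamic pages can differ between requests for unrelated reasons.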

How to see the Crawler tab in the Algolia Dashboard?

- Make sure that your crawler configuration file was saved on crawler.algolia.com.

- Make sure that you have admin ACL permissions on the indices specified in your crawler’s configuration file.

- Make sure that your appId and apiKey are correct and that they grant admin permission to the crawler for these indices.

When are pages skipped or ignored?

At addition time

Pages skipped at addition time are not added to the URL database. This can happen for two reasons:

- The URL doesn’t match at least one of your actions’ pathsToMatch.

- The URL matches one of your crawler’s exclusionPatterns.
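The two addition-time rules above can be sketched as a single predicate. This is only an approximation for reasoning about a configuration: it uses Python's fnmatch for glob matching, which is an assumption and may not behave exactly like the Crawler's own pattern matching. The function name is hypothetical.

```python
from fnmatch import fnmatchcase


def is_added(url, paths_to_match, exclusion_patterns):
    """Return True if url would pass both addition-time rules.

    paths_to_match: all pathsToMatch patterns, gathered from every action.
    exclusion_patterns: the crawler's exclusionPatterns.
    Matching uses fnmatch globs, an assumption made for this sketch.
    """
    matches_an_action = any(fnmatchcase(url, p) for p in paths_to_match)
    is_excluded = any(fnmatchcase(url, p) for p in exclusion_patterns)
    return matches_an_action and not is_excluded
```

For example, with paths_to_match set to ["https://example.com/docs/**"] and exclusion_patterns set to ["**/*.pdf"], a documentation page is added, while a PDF under /docs/ or any page under /blog/ is not.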

At retrieval time

Pages skipped at retrieval time are added to the URL database, retrieved, but not processed. Those are flagged “Ignored” in our interface. This can happen for a number of reasons:

- The robots.txt check didn’t allow this URL to be crawled.

- The URL is a redirect (note, we add the redirect target to the URL database but skip the rest of the page).

- The page’s HTTP status code is not 200.

- The content type is not one of the expected ones.

- The page contains a canonical link. Note: we add the canonical target as a page to crawl (according to the same addition-time filters) and then skip the current page.

- There is a robots meta tag in the HTML with a value of noindex, for example <meta name="robots" content="noindex"/>.
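To check a page against these reasons yourself, a rough Python sketch follows. It is not the Crawler's implementation: the order of the checks, the assumption that text/html is the expected content type, and the assumption that a canonical link pointing to the page itself does not cause a skip are all guesses made for illustration. The names HeadSignals and ignore_reason are hypothetical.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class HeadSignals(HTMLParser):
    """Collects the canonical link and the robots noindex directive."""

    def __init__(self):
        super().__init__()
        self.canonical = None
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "link" and (attr.get("rel") or "").lower() == "canonical":
            self.canonical = attr.get("href")
        if tag == "meta" and (attr.get("name") or "").lower() == "robots":
            directives = (attr.get("content") or "").lower().split(",")
            if "noindex" in [d.strip() for d in directives]:
                self.noindex = True


def ignore_reason(url, status, content_type, html, robots_lines,
                  user_agent="Algolia Crawler"):
    """Return a short reason why the page might be ignored, or None."""
    robots = RobotFileParser()
    robots.parse(robots_lines)  # lines of the site's robots.txt
    if not robots.can_fetch(user_agent, url):
        return "robots.txt"
    if 300 <= status < 400:
        return "redirect"
    if status != 200:
        return "http status"
    if not content_type.startswith("text/html"):  # assumed expected type
        return "content type"
    signals = HeadSignals()
    signals.feed(html)
    if signals.canonical and signals.canonical != url:  # assumption
        return "canonical"
    if signals.noindex:
        return "noindex"
    return None
```

The URL Tester remains the authoritative source for why a given URL was ignored; a script like this only helps narrow down likely causes before you open it.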

When are records deleted?

Launching a crawl completely clears the state of your database. When your crawl completes, your old indices are overwritten by the data indexed during the new run. If you want to save a backup of your old index, set the saveBackup parameter of your crawler to true.

Crawler has SSL issues but my website works fine

You may encounter SSL-related errors when indexing your website using the Algolia Crawler, even though your website works fine in a web browser. That’s because web browsers have extra features to handle SSL certificates that the Crawler doesn’t provide.

UNABLE_TO_VERIFY_LEAF_SIGNATURE

This error generally happens when your website’s certificate chain is incomplete. When an intermediate certificate authority signs your SSL certificate, you need to install this intermediate certificate on your server.

Verify your certificate chain

To verify the validity of your certificate chain, you can use OpenSSL:

openssl s_client -showcerts -connect algolia.com:443 -servername algolia.com

- If the chain is valid, you should see Verify return code: 0 (ok) at the end, and no errors in the output.

- If it’s invalid, you should get either Verify return code: 21 (unable to verify the first certificate) at the end, or errors in the verification steps, such as:

CONNECTED(00000005)
depth=0 C = FR, L = PARIS, O = Algolia, CN = Algolia.com
verify error:num=20:unable to get local issuer certificate
verify return:1
depth=0 C = FR, L = PARIS, O = Algolia, CN = Algolia.com
verify error:num=21:unable to verify the first certificate
verify return:1

You can also do the check online.
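If you prefer a scripted check, the following Python sketch performs a TLS handshake with certificate verification and reports the verification code. It is an alternative to the OpenSSL command, not what the Crawler runs. As far as we know, Python's ssl module does not download missing intermediate certificates, so it tends to fail on incomplete chains that a browser tolerates. The function name check_chain is hypothetical.

```python
import socket
import ssl

# Verification codes quoted in this FAQ; OpenSSL defines many others.
VERIFY_CODES = {
    0: "ok",
    20: "unable to get local issuer certificate",
    21: "unable to verify the first certificate",
}


def check_chain(host, port=443, timeout=10):
    """Return (verify_code, message) for a verified TLS handshake."""
    context = ssl.create_default_context()  # uses the system trust store
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            with context.wrap_socket(sock, server_hostname=host):
                return 0, VERIFY_CODES[0]
    except ssl.SSLCertVerificationError as error:
        # verify_code and verify_message come from the underlying OpenSSL.
        return error.verify_code, error.verify_message
```

For example, check_chain("algolia.com") returns code 0 when the chain verifies. A non-zero code such as 20 or 21 points to a chain problem like the one described above.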

Fix your server

The correct way to bundle the intermediate certificates depends on your web server. What’s My Chain Cert explains how to fix the issue for many popular web servers.

Does the crawler support RSS?

Yes, the crawler can discover links present in RSS feeds: it parses and extracts all <link> tags present in RSS files.

You can’t use the content of the RSS file to generate records: the crawler only extracts links, for discovery purposes.
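To preview which links an RSS feed exposes for discovery, a minimal sketch using Python's standard XML parser is shown below. It only illustrates the idea of collecting <link> tags and is not the Crawler's parser; the function name and the sample feed are made up for the example.

```python
import xml.etree.ElementTree as ET

SAMPLE_FEED = """\
<rss version="2.0">
  <channel>
    <title>Example blog</title>
    <link>https://example.com/</link>
    <item>
      <title>First post</title>
      <link>https://example.com/posts/1</link>
    </item>
    <item>
      <title>Second post</title>
      <link>https://example.com/posts/2</link>
    </item>
  </channel>
</rss>
"""


def extract_links(rss_text):
    """Return the text of every <link> element, in document order."""
    root = ET.fromstring(rss_text)
    return [
        element.text.strip()
        for element in root.iter("link")
        if element.text and element.text.strip()
    ]


links = extract_links(SAMPLE_FEED)
```

Here links contains the channel link followed by the two item links. Titles and descriptions are deliberately left out, mirroring the fact that feed content is not used to generate records.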