Path Explorer

The Crawler comes with a set of debugging tools, including the Path Explorer.

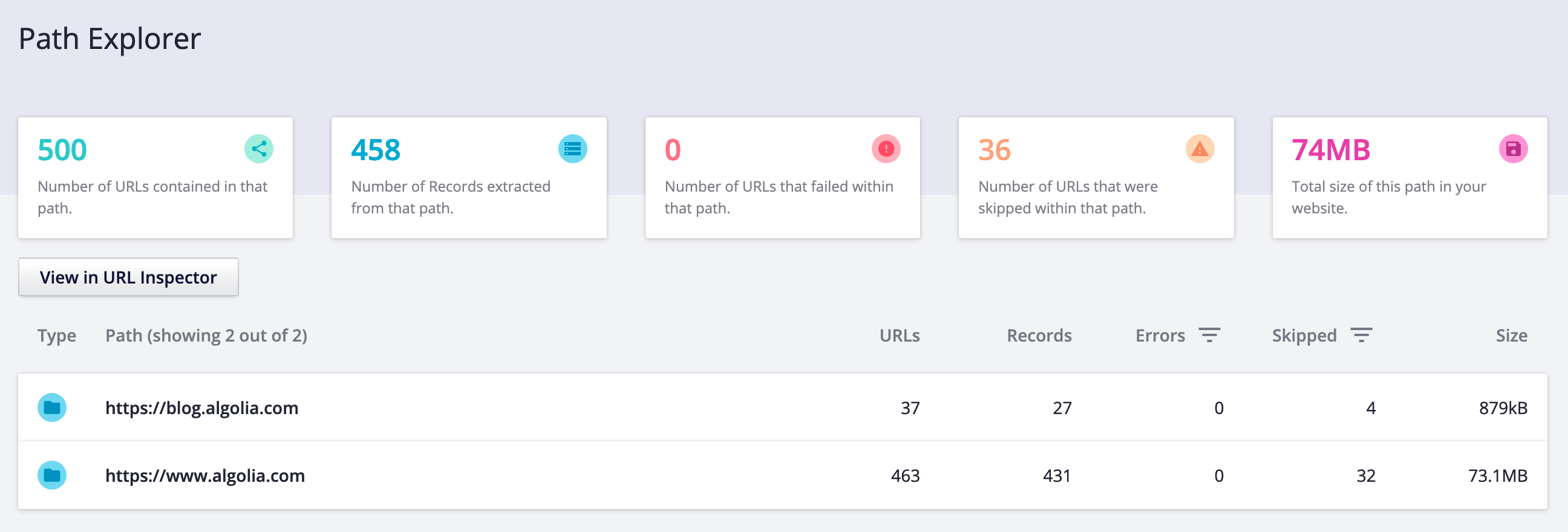

You can use the Path Explorer to detect patterns and anomalies. At a glance, it shows whether specific sections of your site were crawled properly, how many URLs were crawled, how many errors occurred, how much bandwidth was used, and more.

Path Explorer as a URL Directory

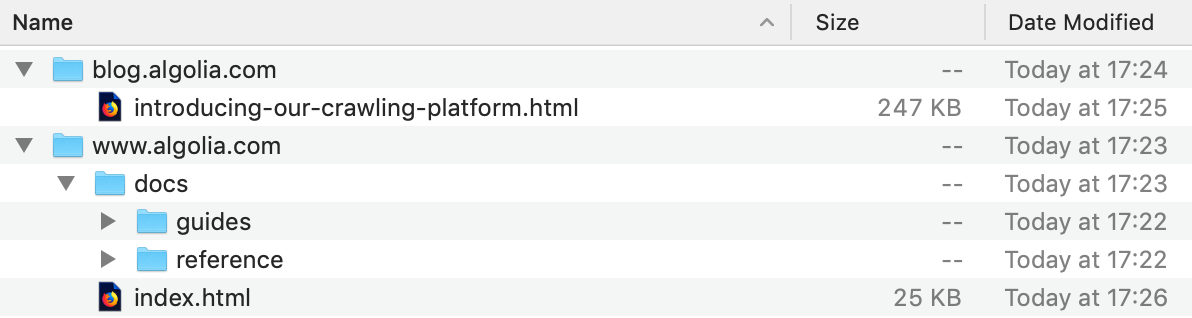

With Path Explorer, you can explore your crawled website(s) as though you were navigating folders on your computer.

Each website is treated as a top-level folder, and each subpath as a folder nested inside it.

For instance, blog.algolia.com and www.algolia.com could give you this structure:
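As a rough sketch of the idea, the snippet below groups a handful of URLs into a folder-like tree. Only the two hostnames come from the example above; the paths and the helper names (`buildPathTree`, `renderTree`) are made up for illustration, and this is not how the Path Explorer itself is implemented.

```typescript
// Hypothetical crawled URLs: only the two hostnames come from the text.
const crawledUrls: string[] = [
  "https://www.algolia.com/",
  "https://www.algolia.com/pricing",
  "https://www.algolia.com/doc/guides",
  "https://blog.algolia.com/",
  "https://blog.algolia.com/engineering/post-1",
];

// A folder in the tree: maps a child folder name to the nested folder.
interface PathNode {
  children: Map<string, PathNode>;
}

// Build a tree whose first level is the hostname, followed by one level
// per non-empty path segment.
function buildPathTree(urls: string[]): PathNode {
  const root: PathNode = { children: new Map<string, PathNode>() };
  for (const raw of urls) {
    const url = new URL(raw);
    const pathSegments = url.pathname.split("/").filter((s) => s.length > 0);
    const segments = [url.hostname].concat(pathSegments);
    let node = root;
    for (const segment of segments) {
      let child = node.children.get(segment);
      if (child === undefined) {
        child = { children: new Map<string, PathNode>() };
        node.children.set(segment, child);
      }
      node = child;
    }
  }
  return root;
}

// Render the tree as indented lines, sorted alphabetically at each level.
function renderTree(node: PathNode, indent: string = ""): string[] {
  const lines: string[] = [];
  const names = Array.from(node.children.keys()).sort();
  for (const name of names) {
    lines.push(indent + name + "/");
    const child = node.children.get(name);
    if (child !== undefined) {
      lines.push(...renderTree(child, indent + "  "));
    }
  }
  return lines;
}

console.log(renderTree(buildPathTree(crawledUrls)).join("\n"));
```

With these sample URLs, `blog.algolia.com/` and `www.algolia.com/` appear at the top level, with `engineering/`, `doc/`, and `pricing/` nested beneath them.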

Identifying issues

Path Explorer is effective at identifying specific issues. This section presents these issues alongside their usual solutions.

Identifying ignored websites and paths

Your pathsToMatch parameter is too restrictive

- Modify your pathsToMatch pattern(s)
- Add a new pattern

Your website is missing links from the pages we explored to the ignored website or path

- Improve your website by adding links between sections

Your startUrls parameter is missing a first page to discover this website or path
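To make the first and last causes concrete, here is a minimal sketch of a configuration fragment. The URLs, the index name, and the glob patterns are hypothetical. Placing pathsToMatch inside an `actions` entry is an assumption about the configuration layout, so check it against your own crawler configuration.

```typescript
// Sketch only: URLs, index name, and patterns are hypothetical.
const configFragment = {
  // One entry page per website or section that links alone do not reach.
  startUrls: [
    "https://www.algolia.com/",
    "https://blog.algolia.com/", // added so the blog can be discovered
  ],
  actions: [
    {
      indexName: "example_index", // hypothetical name
      // Assumed layout: pathsToMatch is set per action.
      pathsToMatch: [
        "https://www.algolia.com/**",
        "https://blog.algolia.com/**", // new pattern covering the ignored website
      ],
    },
  ],
};

console.log(JSON.stringify(configFragment, null, 2));
```

After changing either parameter, run a new crawl and check in the Path Explorer that the previously ignored website or path now shows crawled URLs.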

Identifying crawled websites and paths which should be excluded

Your pathsToMatch parameter might be too permissive

- Make your pathsToMatch patterns more specific

You’re missing a pattern in exclusionPatterns

- Add the website or path to exclusionPatterns
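The sketch below shows how a narrower pathsToMatch pattern and an exclusionPatterns entry change which URLs qualify. It uses a deliberately simplified glob matcher written for this example (`globToRegExp`, `matchesAny`, and `wouldBeCrawled` are hypothetical helpers); the crawler's real pattern matching supports more syntax and may behave differently in edge cases. All URLs and patterns are made up.

```typescript
// Simplified stand-in for glob matching:
// "**" matches any characters, "*" matches within a single path segment.
function globToRegExp(glob: string): RegExp {
  let source = "";
  let i = 0;
  while (i < glob.length) {
    if (glob.slice(i, i + 2) === "**") {
      source += ".*";
      i += 2;
    } else if (glob.charAt(i) === "*") {
      source += "[^/]*";
      i += 1;
    } else {
      // Escape characters that are special in regular expressions.
      source += glob.charAt(i).replace(/[.+?^${}()|[\]\\]/g, "\\$&");
      i += 1;
    }
  }
  return new RegExp("^" + source + "$");
}

function matchesAny(url: string, patterns: string[]): boolean {
  return patterns.some((pattern) => globToRegExp(pattern).test(url));
}

// A URL qualifies when it matches an inclusion pattern and no exclusion pattern.
function wouldBeCrawled(
  url: string,
  pathsToMatch: string[],
  exclusionPatterns: string[],
): boolean {
  return matchesAny(url, pathsToMatch) && !matchesAny(url, exclusionPatterns);
}

// Hypothetical patterns: a specific section instead of the whole website,
// plus one excluded subpath.
const pathsToMatch = ["https://www.algolia.com/doc/**"];
const exclusionPatterns = ["https://www.algolia.com/doc/legacy/**"];

const sampleUrls = [
  "https://www.algolia.com/doc/guides/search",
  "https://www.algolia.com/pricing",
  "https://www.algolia.com/doc/legacy/v1",
];

for (const url of sampleUrls) {
  console.log(url, wouldBeCrawled(url, pathsToMatch, exclusionPatterns));
}
```

Of the three sample URLs, only the first qualifies: the second falls outside the narrowed pattern, and the third is removed by the exclusion pattern.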

Identifying websites and paths with numerous errors

We divide errors into three types:

- Website errors: Some HTTP codes, wrong content type, network error, timeout, etc.
  - Contact the team responsible for the website and ask them to investigate the recurring errors
- Configuration errors: Runtime errors, invalid JSON, extraction timeout, etc.
  - Fix your crawler’s configuration to prevent these errors
- Internal errors: These indicate a failure resulting from one of our internal services
  - Contact us at support+crawler@algolia.com if the issue persists

Identifying websites and paths that are consuming lots of bandwidth

If you’re crawling frequently, bandwidth costs might go up quickly.

- Make sure you’re only crawling what’s necessary. Note that ignored pages are also crawled. If you have a lot of ignored pages, consider setting stricter pathsToMatch patterns or adding exclusionPatterns.
- Decrease your crawl frequency in the schedule parameter to proportionally reduce bandwidth costs
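The proportional relationship can be checked with simple arithmetic. The sketch below assumes every crawl downloads roughly the same amount of data; the 2 GB figure and the helper name `estimateMonthlyBandwidthGb` are hypothetical, and the schedule value itself is not shown.

```typescript
// Rough estimate: total bandwidth scales linearly with the number of crawls,
// assuming each crawl downloads about the same amount of data.
function estimateMonthlyBandwidthGb(gbPerCrawl: number, crawlsPerMonth: number): number {
  return gbPerCrawl * crawlsPerMonth;
}

const gbPerCrawl = 2; // hypothetical size of one full crawl

const dailyCrawls = estimateMonthlyBandwidthGb(gbPerCrawl, 30); // one crawl per day
const weeklyCrawls = estimateMonthlyBandwidthGb(gbPerCrawl, 4); // about one crawl per week

console.log("Daily schedule:  " + dailyCrawls + " GB per month");
console.log("Weekly schedule: " + weeklyCrawls + " GB per month");
```

Under these assumptions, moving from a daily to a weekly schedule takes the estimate from 60 GB to 8 GB per month.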

If there are specific parts of your website you’d like to crawl more frequently than others, please contact us at support+crawler@algolia.com.